PROBLEM:

What is a Product Owner?

SOLUTION:

Here is a short animated video on the role of the product owner. I thought it was pretty cool so I figured I’d share it. It’s only a few minutes. It’s worth checking out…

http://tv.ssw.com/3244/what-is-a-product-owner

If you find this useful, you are welcome to press one of the ads in this page.. Thanks!

Sunday, September 29, 2013

Thursday, August 29, 2013

TFS 2010 Power Tools -- Error 1001 in Windows 8

PROBLEM:

When trying to install "Team Foundation Server Power Tools December 2011" in Windows 8, you get the following error: "Microsoft Team Foundation Server 2010 Power Tools -- Error 1001". Also, you cannot see "Process Editor" menu in Visual Studio "Tools" menu.

You already installed (by this order):

1. Visual Studio 2010

2. Visual Studio 2010 SP1

SOLUTION:

Choose custom install and do not install "Best Practice Analyzer"

If you find this useful, you are welcome to press one of the ads in this page.. Thanks!

When trying to install "Team Foundation Server Power Tools December 2011" in Windows 8, you get the following error: "Microsoft Team Foundation Server 2010 Power Tools -- Error 1001". Also, you cannot see "Process Editor" menu in Visual Studio "Tools" menu.

You already installed (by this order):

1. Visual Studio 2010

2. Visual Studio 2010 SP1

SOLUTION:

Choose custom install and do not install "Best Practice Analyzer"

If you find this useful, you are welcome to press one of the ads in this page.. Thanks!

Tuesday, August 13, 2013

Use MongoDB in Windows Azure

PROBLEM:

You want to use MongoDB in Windows Azure

SOLUTION:

See the following links:

If you find this useful, you are welcome to press one of the ads in this page.. Thanks!

You want to use MongoDB in Windows Azure

SOLUTION:

See the following links:

If you find this useful, you are welcome to press one of the ads in this page.. Thanks!

Tuesday, August 6, 2013

Performance best practices for very large tables

PROBLEM:

What are the performance best practices for very large tables?

SOLUTION:

Here is a summary of best practices for querying, monitoring, and performance tuning for your very large tables:

If you find this useful, you are welcome to press one of the ads in this page.. Thanks!

What are the performance best practices for very large tables?

SOLUTION:

Here is a summary of best practices for querying, monitoring, and performance tuning for your very large tables:

- Pay attention to both the results of a query and the execution plan designed to return those results. Make it a routine exercise to scan query plans to become more familiar with how SQL Server resolves queries.

- De-normalize your schema where possible to reduce the need to join very large tables.

- Use table partitioning for your largest tables, and be sure when querying that the optimizer uses partition elimination whenever possible.

- Align secondary indexes with the same table partition function to reduce partitioned table maintenance.

- Consider using a heap instead of a clustered index, especially with a table that partially mimics a clustered index via its partition function.

- Design indexes to be covering for larger recurring queries whenever possible, especially when creating a filtered (i.e., partial) index.

- Use an indexed view as an alternative to a denormalized table or to materialize an aggregated dataset. The more static the underlying data, the less overhead will be required to maintain the indexed views.

- Use summary tables with automatic (scheduled) updates as an alternative to indexed views, especially when there is less significance in the currency of the data.

- For query monitoring, focus on the quantitative measures of Disk I/O, CPU Time, and Query Duration.

- Use the features of the Management Data Warehouse for overall server monitoring, and use the SQL Trace data collection set for query monitoring.

- Focus your tuning efforts on SQL code that is repeatedly run, either as a stored procedure or a SQL script, and that consumes the most server resources. Data warehouse applications tend to place the greatest pressure on Disk I/O.

- For tuning and management of large queries, consider saving historical copies of XML execution plans to disk for later reference.

If you find this useful, you are welcome to press one of the ads in this page.. Thanks!

Tuesday, June 11, 2013

MongoDB Hardware Tips

PROBLEM:

You need some hardware tips for your MongoDB installation:

See this: https://www.youtube.com/watch?v=19VZJ9H3LmQ

If you find this useful, you are welcome to press one of the ads in this page.. Thanks!

You need some hardware tips for your MongoDB installation:

- Buy $1k 1000 servers or $10k 100 servers?

- Fast CPU vs. more CPU cores?

- More RAM is good?

- Virtualization is ok?

- SSD is good?

- Disable NUMA (Non-Uniform Access Memory) in BIOS?

- File system settings?

See this: https://www.youtube.com/watch?v=19VZJ9H3LmQ

If you find this useful, you are welcome to press one of the ads in this page.. Thanks!

Sunday, June 9, 2013

Step by step guide for MongoDB sharding

PROBLEM:

What is a MongoDB sharded cluster?

SOLUTION:

While sharding is a powerful and compelling feature, it comes with significant infrastructure requirements and some limited complexity costs. As a result, use sharding only as necessary and when indicated by actual operational requirements.

Here is a step by step guide for MongoDB sharding:

Introduction to Sharding

Sharding Setup Demo

The config database

Setup Part 2 Adding the Initial Shards

Enabling sharding for a collection

Working with a sharded collection

Choosing shard keys

Process and Machine Layout

Bulk inserts and pre-splitting

Further tips and best practices

If you find this useful, you are welcome to press one of the ads in this page.. Thanks!

Tuesday, May 28, 2013

MongoDB performance tuning, indexes, explain plan, hints and profiler

PROBLEM:

What you need to know for MongoDB performance tuning?

SOLUTION:

The following links will cover performance tuning, obviously a key aspect of database administration. You can find there how to optimize the performance of operations against a MongoDB database, including both queries and write operations. Indexes of course play a key component in many cases; thus you can find also a talk about MongoDB indexes in detail. You can find there also other performance related features including the explain plan and hint commands and the profiler.

Indexes in MongoDB

https://www.youtube.com/watch?v=a7TrHP1C6qQ

Indexes types

https://www.youtube.com/watch?v=pyoOGhGDoj8

Covered Indexes

https://www.youtube.com/watch?v=boAkBnMUBnw

Hints

https://www.youtube.com/watch?v=oaTm0Kftit8

Read vs. Writes

https://www.youtube.com/watch?v=USDbDotmums

Current Operations and Kill Operations

https://www.youtube.com/watch?v=i7XEKAtRS_M

Profiler

https://www.youtube.com/watch?v=MzLmI8FNB94

Mongostat & Mongotop

https://www.youtube.com/watch?v=fEgl1DT_lDA

If you find this solution useful, you are welcome to press one of the ads in this page.. Thanks!

What you need to know for MongoDB performance tuning?

SOLUTION:

The following links will cover performance tuning, obviously a key aspect of database administration. You can find there how to optimize the performance of operations against a MongoDB database, including both queries and write operations. Indexes of course play a key component in many cases; thus you can find also a talk about MongoDB indexes in detail. You can find there also other performance related features including the explain plan and hint commands and the profiler.

Indexes in MongoDB

https://www.youtube.com/watch?v=a7TrHP1C6qQ

Indexes types

https://www.youtube.com/watch?v=pyoOGhGDoj8

Covered Indexes

https://www.youtube.com/watch?v=boAkBnMUBnw

Hints

https://www.youtube.com/watch?v=oaTm0Kftit8

Read vs. Writes

https://www.youtube.com/watch?v=USDbDotmums

Current Operations and Kill Operations

https://www.youtube.com/watch?v=i7XEKAtRS_M

Profiler

https://www.youtube.com/watch?v=MzLmI8FNB94

Mongostat & Mongotop

https://www.youtube.com/watch?v=fEgl1DT_lDA

If you find this solution useful, you are welcome to press one of the ads in this page.. Thanks!

Wednesday, April 10, 2013

Optimizing MongoDB

PROBLEM:

How to optimize your MongoDB?

SOLUTION:

Here are some tips and tricks for optimizing your MongoDB deployment:

Design an efficient schema

1. When you’re designing your schema for MongoDB, think about the ways that you are going to access your data and the common access patterns. You want to take advantage of MongoDB’s flexible schema and design rich documents

2. A key question when designing a MongoDB schema is when to embed and when to link. Embedding is the nesting of objects and arrays inside a BSON document. Links are references between documents. Embedding is a bit like "prejoined" data. Operations within a document are easy for the server to handle. Links in contrast must be processed client-side by the application; the application does this by issuing a follow-up query.

Generally, for "contains" relationships between entities, embedding should be be chosen. Embedding documents when it should actually be linked would result in duplication of data.

How to optimize your MongoDB?

SOLUTION:

Here are some tips and tricks for optimizing your MongoDB deployment:

Design an efficient schema

1. When you’re designing your schema for MongoDB, think about the ways that you are going to access your data and the common access patterns. You want to take advantage of MongoDB’s flexible schema and design rich documents

2. A key question when designing a MongoDB schema is when to embed and when to link. Embedding is the nesting of objects and arrays inside a BSON document. Links are references between documents. Embedding is a bit like "prejoined" data. Operations within a document are easy for the server to handle. Links in contrast must be processed client-side by the application; the application does this by issuing a follow-up query.

Generally, for "contains" relationships between entities, embedding should be be chosen. Embedding documents when it should actually be linked would result in duplication of data.

Optimize your queries

Have slow database queries? Get more information on the performance of your queries using the explain feature. Using the mongo shell, invoke the explain() method on a cursor:

> db.collection.find(query).explain()

The result will be a document that contains the explain output:

{

"cursor" : "BasicCursor",

"indexBounds" : [ ],

"nscanned" : 57594,

"nscannedObjects" : 57594,

"nYields" : 2 ,

"n" : 3 ,

"millis" : 108,

"indexOnly" : false,

"isMultiKey" : false,

"nChunkSkips" : 0

}

Know your working set size

Retrieving data in RAM is orders of magnitude faster than disk. Think about the total database size in relation to the set of data that is active at any given point in time. If you want your queries to be fast, make sure that you have enough memory to hold your active working set in RAM.

Don’t forget your indexes. They take up memory too!

More Information

If you find this solution useful, you are welcome to press one of the ads in this page.. Thanks!

Thursday, March 21, 2013

MongoDB Deployment Strategies

PROBLEM:

Ready to deploy MongoDB? Here's what you need to know

SOLUTION:

Use Replication

Replication is the best way to ensure the safety of your data. It is recommended that you use replica sets, a form of asynchronous master/slave replication that adds automatic failover and automatic recovery of member nodes.

More information on replication:

• Replica Sets

• Best practices for replica sets on EC2

Sharding is there when you need it

If your data exceeds the resources of a single database server, MongoDB can convert to a sharded cluster for horizontal scaling across multiple nodes. With MongoDB sharding, the failover and balancing of nodes is handled automatically, with few or no changes to the original application code.

Deploying in the cloud

MongoDB was designed to run on commodity and virtualized environments. Because it does not depend on high end compute, network or storage resources, your deployment is not limited by the biggest server resources you can get in cloud environments like Amazon EC2 or Rackspace Cloud. You can add additional storage, memory and transaction capacity by adding more virtual servers to your deployment.

• Docs: Amazon EC2

• Docs: VMware Cloud Foundry

• Docs: Red Hat OpenShift

• Docs: Windows Azure

• Docs: Hosting Center

Monitoring your deployment

MongoDB includes many diagnostic commands and tools, like mongostat, the query profiler, and more. In addition the open source community has built plugins from monitoring tools like Ganglia, munin, and cacti.

In addition, 10gen offers a cloud-based monitoring and alerting solution for MongoDB deployments called MongoDB Monitoring Service (MMS). MMS is a publicly available software-as-a-service solution available at mms.10gen.com.

If you find this solution useful, you are welcome to press one of the ads in this page.. Thanks!

Ready to deploy MongoDB? Here's what you need to know

SOLUTION:

Use Replication

Replication is the best way to ensure the safety of your data. It is recommended that you use replica sets, a form of asynchronous master/slave replication that adds automatic failover and automatic recovery of member nodes.

More information on replication:

• Replica Sets

• Best practices for replica sets on EC2

Sharding is there when you need it

If your data exceeds the resources of a single database server, MongoDB can convert to a sharded cluster for horizontal scaling across multiple nodes. With MongoDB sharding, the failover and balancing of nodes is handled automatically, with few or no changes to the original application code.

Deploying in the cloud

MongoDB was designed to run on commodity and virtualized environments. Because it does not depend on high end compute, network or storage resources, your deployment is not limited by the biggest server resources you can get in cloud environments like Amazon EC2 or Rackspace Cloud. You can add additional storage, memory and transaction capacity by adding more virtual servers to your deployment.

• Docs: Amazon EC2

• Docs: VMware Cloud Foundry

• Docs: Red Hat OpenShift

• Docs: Windows Azure

• Docs: Hosting Center

Monitoring your deployment

MongoDB includes many diagnostic commands and tools, like mongostat, the query profiler, and more. In addition the open source community has built plugins from monitoring tools like Ganglia, munin, and cacti.

In addition, 10gen offers a cloud-based monitoring and alerting solution for MongoDB deployments called MongoDB Monitoring Service (MMS). MMS is a publicly available software-as-a-service solution available at mms.10gen.com.

If you find this solution useful, you are welcome to press one of the ads in this page.. Thanks!

Wednesday, March 13, 2013

Managing UNDO data in Oracle

PROBLEM:

What are the basics of Oracle undo data?

SOLUTION:

1. A.C.I.D Standard

4. Managing UNDO Data

6. Oracle Isolation Levels

If you find this solution useful, you are welcome to press one of the ads in this page.. Thanks!

What are the basics of Oracle undo data?

SOLUTION:

1. A.C.I.D Standard

- Atomicity

- Consistency

- Isolation

- Durability

- Executing a Rollback

- Executing a Commit

- DDL and Transaction Control

- The So-Called “Auto-Commit”

- Executing SQL statements

- Executing an Update Statement

- Executing Insert and Delete Statements

4. Managing UNDO Data

- Undo Parameters

- Undo Retention Guarantee (10g)

- Sizing your undo tablespace

6. Oracle Isolation Levels

--------------------------------------------------------------------------

-- ACID (aka ACID test)

--------------------------------------------------------------------------

-- In computer science, ACID (atomicity, consistency, isolation, durability) is a set of properties that guarantee database transactions are processed reliably.

-- Atomicity

-- The principle of atomicity states that all parts of a transaction must complete, or none of them.

-- For example, if your business analysts have said that every time you change an employee’s salary you must also change his grade, then the “atomic” transaction will consist of two updates. The database must guarantee that both go through, or neither.

-- Consistency

-- The principle of consistency states that the results of a query must be consistent with the state of the database at the time the query started.

-- For uncommited data - as long as user-A changing data in Table-X without applying Commit, all other users will see the "old values".

-- For commited data - Imagine a simple query that averages the value of a column of a table. If the table is large, it will take many minutes to pass through the table.

-- If other users are updating the column and commit while the query is in progress, should the query include the new or the old values? Should it include rows that were inserted or deleted after the query started?

-- Isolation

-- The principle of isolation states that an incomplete (that is, uncommitted) transaction must be invisible to the rest of the world.

-- Durability

-- The principle of durability states that once a transaction completes with a COMMIT, it must be impossible for the database to lose it.

--------------------------------------------------------------------------

-- DML & DDL - Behind the scenes

--------------------------------------------------------------------------

-- Executing a Rollback

-- If the session that initiated the transaction fails (perhaps the PC running the user process reboots, or the network link goes down), then the PMON will detect that there is a problem, and roll back the transaction.

-- If the server crashes, then on startup SMON will detect the problem and initiate a rollback. A manual rollback requires the user to issue the ROLLBACK command. But however the rollback is initiated, the mechanism is identical.

-- Oracle’s implementation of the ROLLBACK command is to use undo data to construct and execute another statement that will reverse the effect of the first statement.

-------------------------

-- Executing a Commit

-------------------------

-- When you say COMMIT, all that happens physically is that LGWR flushes the log buffer to disk. DBWn does absolutely nothing. This is one of the most important performance features of the Oracle database.

--------------------------------

-- DDL and Transaction Control

--------------------------------

-- The COMMIT and ROLLBACK statements apply only to DML. You cannot roll back a DDL statement: once executed, it is immediately durable

--------------------------------

-- The So-Called “Auto-Commit”

--------------------------------

-- Quite simply, there is no such thing as an automatic commit.

-- When you execute a DDL statement for instance, a perfectly normal COMMIT is included in the source code that implements the DDL command.

-- When you exit from your user process - If you are using SQL*Plus on a Windows terminal (never mind what operating system the database server is running) and you issue a DML statement followed by an “exit,” your transaction will be committed.

-- This is because a COMMIT statement is built into the SQL*Plus “exit” command. When you click in the top-right corner of the SQL*Plus window? The window will close, and if you log in again, you will see that the transaction has been rolled back.

-- This is because the programmers who wrote SQL*Plus for Microsoft Windows included a ROLLBACK statement in the code that is executed when you close the window.

-----------------------------

-- Executing SQL statements

-----------------------------

-- Executing a SELECT Statement

-- 1. The server process executing the statement will first check whether the blocks containing the data required are already in memory, in the database buffer cache.

-- 2. If they are, then execution can proceed immediately. If they are not, the server must locate them on disk and copy them into the database buffer cache.

-- 3. Once the data blocks required for the query are in the database buffer cache, any further processing (such as sorting or aggregation) is carried out in the PGA of the session.

-- 4. When the execution is complete, the result set is returned to the user process.

----------------------------------

-- Executing an Update Statement

----------------------------------

-- 1. The first step in executing DML is the same as executing SELECT: the required blocks must be found in memory or copied into memory from disk. The only change is that an empty block of an undo segment is needed too

-- 2. Locks must be placed on any rows that will be affected by the operation

-- 3. Then the redo is generated: the server process writes to the log buffer the changes that are going to be applied to the data blocks.

-- 4. Having generated the redo, the update is carried out in the database buffer cache:

-- A. The block of table data is updated with the new version of the changed column,

-- B. The old version of the changed column is written to the block of an undo segment.

-- 5. From this point until the update is committed, all queries from other sessions addressing the changed row will be redirected to the undo data. Only the session that is doing the update will see the actual current version of the row in the table block.

-------------------------------------------

-- Executing Insert and Delete Statements

-------------------------------------------

-- Conceptually, INSERT and DELETE are managed in the same fashion as an UPDATE.

-- Redo generation is exactly the same: all changes to be made to data are first written out to the log buffer.

-- The difference is in the amount of undo generated.

-- INSERT - When a row is inserted, the only undo generated consists of writing out the new rowid to the undo block. This is because to roll back an INSERT, the only information Oracle requires is the rowid,

-- DELETE - the whole row is written to the undo block, so that the deletion can be rolled back if need be by inserting the complete row back into the table.

--------------------------------------------------------------------------

-- Managing UNDO Data

--------------------------------------------------------------------------

-- Undo Parameters

SHOW PARAMETER undo

-- UNDO_MANAGEMENT

-- Manual - meaning that Oracle will not use undo segments at all. This is for backward compatibility, and if you use this, you will have to do a vast amount of work creating and tuning rollback segments. Don’t do it.

-- Auto - to enable automatic undo management through the use of undo segments. In this case, DBAs relinquish undo segment management to Oracle. This parameter is static.The other parameters are dynamic.

-- UNDO RETENTION

-- UNDO_RETENTION parameter specifies the amount of time in seconds that Oracle *attempts* to keep undo data available.

-- If, for example, your longest running query is thirty minutes, you would set this parameter to 1800. Oracle will then attempt to keep all undo data for at least 1800 seconds, and your query should therefore never fail with ORA-1555.

-- If, however, you do not set this parameter, or if you set it to zero, Oracle will still keep data for as long as it can anyway.

-- The algorithm controlling which expired undo data is overwritten first will always choose to overwrite the oldest bit of data; Therefore, UNDO_RETENTION is always at the maximum allowed by the size of the tablespace

-- UNDO_TABLESPACE

UNDO_TABLESPACE This parameter nominates a tablespace, which must have been created as an undo tablespace, as the active undo tablespace.

-- See Undo tablespaces:

SELECT tablespace_name, contents, retention

FROM dba_tablespaces;

-- Change retention

ALTER SYSTEM SET undo_retention = 800 ;

-- Undo Retention Guarantee (10g)

-- With the 10g release, there is an option to guarantee undo retention. This means that undo data will never be overwritten until the time specified by the UNDO_RETENTION parameters has passed.

-- Retention guarantee is enabled at the tablespace level via the Retention Guarantee clause. This attribute can be specified at undo tablespace creation time, or an undo tablespace can be altered later to enable it.

-- Once you activate an undo tablespace for which a retention guarantee has been specified, all queries will complete successfully, provided they finish within the undo retention time. You -- -- will never have “snapshot too old” errors again. The downside is that transactions may fail for lack of undo space because Oracle cannot overwrite committed undo data until the retention time has passed.

SELECT tablespace_name, contents, retention

FROM dba_tablespaces;

ALTER TABLESPACE UNDOTBS1 RETENTION GUARANTEE;

SELECT tablespace_name, contents, retention

FROM dba_tablespaces;

-- Via OEM:

-- Server => (Database Configuration) => Automatic Undo Management

-- Server => (Database Configuration) => Initialization Parameters

-------------------------------------

-- Sizing your undo tablespace

-------------------------------------

SELECT (UR * (UPS * DBS)) + (DBS * 24) AS "Bytes"

FROM (SELECT value AS UR FROM v$parameter WHERE name = 'undo_retention'),

(SELECT (SUM(undoblks)/SUM(((end_time - begin_time)*86400))) AS UPS FROM v$undostat),

(select block_size as DBS from dba_tablespaces where tablespace_name=

(select value from v$parameter where name = 'undo_tablespace'));

-- or via OEM ...

-------------------------------------

-- Undo segment management

-------------------------------------

-- active segments in the Undo tablespace

set lines 200

col program for a15

col username for a10

col osuser for a15

col sid for 999

col sr# for 999

col segment_name for a25

col tablespace_name for a15

col status for a15

SELECT segment_id, segment_name, B.status, xacts, tablespace_name

FROM DBA_ROLLBACK_SEGS, V$ROLLSTAT B

WHERE segment_id=usn;

-- for every new transaction a new undo segment will be available

update hr.employees set salary = salary + 1 ;

update hr.departments set department_name = department_name || 'xxx';

update hr.regions set region_name = region_name || 'zzz' ;

rollback

--------------------------------------------------------------------

-- Useful queries for UNDO usage

--------------------------------------------------------------------

-- Identify transaction and session:

-- In one session:

Conn hr/hr

UPDATE employees SET salary=salary;

-- In another session:

conn / as sysdba

-- See all active Undo segments:

SET LINES 120 PAGES 120

col program for a15

col username for a10

col osuser for a15

col sid for 999

col sr# for 999

col segment_name for a25

col tablespace_name for a15

col status for a15

SELECT segment_id, segment_name, tablespace_name, B.status, xacts "Active Transactions"

FROM DBA_ROLLBACK_SEGS, V$ROLLSTAT B

WHERE segment_id=usn and xacts > 0;

-- Which sessions use a specific undo segment:

SELECT username, program, osuser, sid, serial#

FROM v$session S, v$transaction T

WHERE S.taddr = T.addr ;

-- Which sessions use active undo segments:

-- If needed can kill the problematic session:

ALTER SYSTEM KILL SESSION 'sid, sr#';

--------------------------------------------------------------------------

-- DEMO - Flashback query

--------------------------------------------------------------------------

SELECT last_name, salary

FROM hr.employees;

-- Update and commit (User forgot to put a where condition, and also commited his changes...)

UPDATE hr.employees

SET salary = 1;

commit;

-- Data is changed and cannot be rolled back...

SELECT last_name, salary

FROM hr.employees;

-- Flashback Query - Select from the table as it was at a specific point in the past:

-- In this example - go back one hour:

SELECT last_name, salary

FROM hr.employees AS OF TIMESTAMP sysdate - 1/24;

-- Fix the table with a correlated Update:

UPDATE hr.employees e

SET salary = (SELECT salary from hr.employees AS OF TIMESTAMP sysdate - 1/24

WHERE employee_id = e.employee_id);

------------------------------------------------------

-- Oracle Isolation Levels

------------------------------------------------------

-- Read committed - This is the default transaction isolation level. Each query executed by a transaction sees only data that was committed before the query (not the transaction) began. An Oracle query never reads dirty (uncommitted) data.

-- Serializable - Serializable transactions see only those changes that were committed at the time the transaction began, plus those changes made by the transaction itself through INSERT, UPDATE, and DELETE statements.

-- Read-only - Read-only transactions see only those changes that were committed at the time the transaction began and do not allow INSERT, UPDATE, and DELETE statements

-- Serializable Example:

-- User A

----------

-- Default behaviour for each new transaction

ALTER SESSION SET ISOLATION_LEVEL=SERIALIZABLE;

-- or

-- Specific behaviour for the next new transaction

SET TRANSACTION ISOLATION LEVEL SERIALIZABLE;

conn hr/hr

SELECT last_name , salary

FROM employees

WHERE rownum < 11 ;

-- User B

----------

conn hr/hr

SELECT last_name , salary

FROM employees

WHERE rownum < 11 ;

UPDATE employees SET salary = 1 ;

COMMIT ;

-- USER A

----------

SELECT last_name , salary

FROM employees

WHERE rownum < 11 ;

-- Fix the table

UPDATE hr.employees e

SET salary = (SELECT salary from hr.employees AS OF TIMESTAMP sysdate - 10/1440

WHERE employee_id = e.employee_id);

----------------------------------

SET TRANSACTION ISOLATION LEVEL READ COMMITTED;

SET TRANSACTION ISOLATION LEVEL SERIALIZABLE;

SET TRANSACTION READ ONLY;

ALTER SESSION SET ISOLATION_LEVEL=SERIALIZABLE;

ALTER SESSION SET ISOLATION_LEVEL=READ COMMITTED;

If you find this solution useful, you are welcome to press one of the ads in this page.. Thanks!

Thursday, February 28, 2013

The Reality of Developer’s Life

PROBLEM:

You want to be a developer

SOLUTION:

Read this first

If you find this solution useful, you are welcome to press one of the ads in this page.. Thanks!

You want to be a developer

SOLUTION:

Read this first

If you find this solution useful, you are welcome to press one of the ads in this page.. Thanks!

Wednesday, February 27, 2013

Your first MongoDB application

PROBLEM:

What you need to know to build your first MongoDB application?

SOLUTION:

MongoDB Terminology

As you build your first application with MongoDB, you should start by knowing some basic terminology. Here are a few key terms and the equivalent concepts in a relational database:

Data as Documents

One of the major benefits of MongoDB is schema flexibility. In MongoDB, there are no predefined fields, collections can have heterogenous structure across documents, and the fields and their value datatypes can vary. For example, here is how you might model a blog post in MongoDB:

As you can see with the tags field in the example above, we have stored an array of strings within our document. And if we want to add another field to our document, we can do so easily. This facility enables you to develop applications iteratively with MongoDB.

Querying

Even though MongoDB is a NoSQL database, you can still access your data with dynamic queries. You'll find that many SQL queries translate easily to MongoDB's document-based query language.

For example, suppose we're using the MongoDB shell and want to return every document in the users collection. Our query would look like this:

db.users.find({})

In this case, our selector is an empty document, which matches every document in the collection. Here's a more selective example:

db.users.find({'last_name': 'Smith'})

Here our selector will match every document where the last_name attribute is 'Smith.'

MongoDB supports a wide array of possible document selectors. For more examples:

For more information

Interested in learning more about building applications with MongoDB? Here are a few resources to get you started:

If you find this solution useful, you are welcome to press one of the ads in this page.. Thanks!

What you need to know to build your first MongoDB application?

SOLUTION:

MongoDB Terminology

As you build your first application with MongoDB, you should start by knowing some basic terminology. Here are a few key terms and the equivalent concepts in a relational database:

| ||||||||||||

Data as Documents

One of the major benefits of MongoDB is schema flexibility. In MongoDB, there are no predefined fields, collections can have heterogenous structure across documents, and the fields and their value datatypes can vary. For example, here is how you might model a blog post in MongoDB:

{author: "meghan",

date: new Date(),

text: "MongoDB is great!",

tags: ["mongodb", "intro"]}Querying

Even though MongoDB is a NoSQL database, you can still access your data with dynamic queries. You'll find that many SQL queries translate easily to MongoDB's document-based query language.

For example, suppose we're using the MongoDB shell and want to return every document in the users collection. Our query would look like this:

db.users.find({})

In this case, our selector is an empty document, which matches every document in the collection. Here's a more selective example:

db.users.find({'last_name': 'Smith'})

Here our selector will match every document where the last_name attribute is 'Smith.'

MongoDB supports a wide array of possible document selectors. For more examples:

|

For more information

Interested in learning more about building applications with MongoDB? Here are a few resources to get you started:

- Building your first MongoDB Application

- MongoDB Schema Design: Insights and Tradeoffs from Jetlore

- Docs: MongoDB Tutorial

If you find this solution useful, you are welcome to press one of the ads in this page.. Thanks!

Union vs. Union All

PROBLEM:

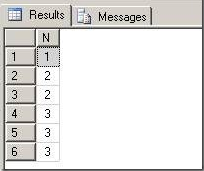

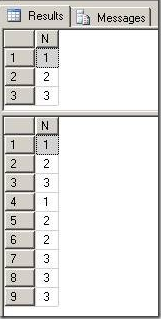

What happens when you combine the same retrieval commands UNION (performs distinct query) and UNION ALL ?

SOLUTION:

Lets create test data:

Now, lets query it:

In the first case, the UNION makes distinct query and contracted three output lines, and in the second case the UNION ALL received three lines added all six.

If you find this solution useful, you are welcome to press one of the ads in this page.. Thanks!

What happens when you combine the same retrieval commands UNION (performs distinct query) and UNION ALL ?

SOLUTION:

Lets create test data:

CREATE TABLE #T (N INT); GO INSERT INTO #T SELECT 1; INSERT INTO #T SELECT 2; INSERT INTO #T SELECT 2; INSERT INTO #T SELECT 3; INSERT INTO #T SELECT 3; INSERT INTO #T SELECT 3; GO SELECT * FROM #T;

Now, lets query it:

SELECT * FROM #T UNION ALL SELECT * FROM #T UNION SELECT * FROM #T

SELECT * FROM #T UNION SELECT * FROM #T UNION ALL SELECT * FROM #T;

In the first case, the UNION makes distinct query and contracted three output lines, and in the second case the UNION ALL received three lines added all six.

If you find this solution useful, you are welcome to press one of the ads in this page.. Thanks!

Subscribe to:

Posts (Atom)